
A Case Study On Abusive Text Detection with Advanced AI System

By Next Solution Lab

12:12:30

2024-07-02

Connecting with a diverse network is essential for professional and personal growth. Unfortunately, some interactions can be harmful. Abusive text detection uses AI to filter harmful language in online communications. These systems employ machine learning and natural language processing (NLP) to detect harassment, hate speech, threats, and offensive language. This technology ensures safe and respectful online environments by monitoring and moderating user-generated content on social media, forums, chat apps, and other online spaces.

Highlight

- Uses advanced AI systems such as ChatGPT to detect abusive text.

- Identifies various forms of abuse, including harassment, hate speech, threats, and offensive language.

- Monitors and moderates user-generated content across social media platforms, forums, chat applications, and other online spaces.

Challenge

The primary challenge is contextual understanding: abusive language can be subtle and context-dependent, and sarcasm, irony, and cultural nuances make it difficult for AI systems to detect abuse accurately. Slang and abusive language also evolve rapidly, so detection accuracy has to be maintained continuously. Balancing precision and recall is critical: the system must avoid flagging non-abusive content as abusive (false positives) while not missing actual abusive content (false negatives). Training data can introduce biases that lead to unfair treatment of certain groups, so representative training data and regular audits are needed to maintain fairness and equity. Finally, collecting and analyzing user data raises privacy concerns, which requires compliance with data protection regulations to maintain user trust.
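To make the precision and recall trade-off concrete, here is a minimal sketch in Python. The labels are made up for illustration and are not results from our system; `precision_recall` is a hypothetical helper name.

```python
def precision_recall(y_true, y_pred):
    """Return (precision, recall) for binary labels where 1 means abusive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # abusive, flagged
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # harmless, flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # abusive, missed
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical ground truth and predictions for eight messages.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]

precision, recall = precision_recall(y_true, y_pred)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Low precision means harmless messages are being flagged. Low recall means abusive messages are slipping through. A stricter moderation policy usually raises one at the cost of the other.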

Solution

To enhance contextual understanding in abusive text detection, ChatGPT leverages sophisticated natural language processing techniques, enabling it to grasp the subtleties and nuances of conversations more effectively. This includes the ability to understand sarcasm, irony, and cultural nuances through the use of transformer-based models that analyze entire conversation threads rather than isolated messages. To improve accuracy and reduce bias, ChatGPT continuously learns from new data, updates, and user feedback. It adapts to evolving language trends and slang. Rigorous bias detection and mitigation techniques are employed during the training phase, with regular audits to maintain fairness and equity. Scalability is addressed through optimized algorithms that require fewer computational resources. Leveraging cloud-based infrastructure ensures efficient real-time processing of large data volumes.
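One way to give the model a whole conversation thread rather than an isolated message is to pack the recent turns into a single request. The sketch below only builds the request payload in the role/content message format used by chat-style APIs. The prompt wording, the `build_messages` helper, and the `max_context` limit are illustrative assumptions, not our production prompt.

```python
# Hypothetical system prompt; the real wording would be tuned per platform.
SYSTEM_PROMPT = (
    "You are a content moderation assistant. Using the whole conversation "
    "for context, decide whether the final message is abusive."
)


def build_messages(thread, max_context=10):
    """Build a chat-style message list from a conversation thread.

    `thread` is a list of (speaker, text) tuples, oldest first. Only the
    last `max_context` turns are kept so the prompt size stays bounded.
    """
    if not thread:
        raise ValueError("thread must contain at least one message")
    recent = thread[-max_context:]
    # The last turn is the message to classify; earlier turns are context.
    *context, (target_speaker, target_text) = recent
    context_lines = "\n".join(f"{s}: {t}" for s, t in context)
    user_content = (
        f"Conversation so far:\n{context_lines or '(no earlier messages)'}\n\n"
        f"Message to classify, from {target_speaker}:\n{target_text}"
    )
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_content},
    ]


messages = build_messages([
    ("alice", "I finally shipped the report."),
    ("bob", "Wow, only three weeks late. Genius."),
])
```

With the earlier turn included, the model can judge the sarcasm in the final message against what was actually said before it.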

Privacy and ethical considerations are managed through data anonymization, ensuring compliance with data protection regulations and safeguarding user privacy. Transparent and accountable systems, including clear guidelines and transparency reports on how abusive content is detected and moderated, help build user trust. Feedback mechanisms allow users to appeal decisions, ensuring accountability and fairness.
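As an illustration of the anonymization step, the sketch below redacts obvious identifiers from a message and replaces the user ID with a salted hash before the text is analyzed. The regular expressions, placeholder tokens, and function names are assumptions for this example. Salted hashing is strictly pseudonymization, and a production system would need broader coverage (names, addresses, and so on).

```python
import hashlib
import re

# Deliberately simple patterns; real-world PII detection needs more than this.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def pseudonymize_user(user_id, salt):
    """Replace a user ID with a stable, salted SHA-256 digest prefix."""
    digest = hashlib.sha256((salt + user_id).encode("utf-8")).hexdigest()
    return "user_" + digest[:12]


def redact_text(text):
    """Mask email addresses and phone numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


redacted = redact_text("mail me at jane.doe@example.com or call +1 555-123-4567")
print(redacted)
```

Because the same salt and ID always produce the same pseudonym, repeated abuse from one account can still be tracked without storing the raw identifier.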

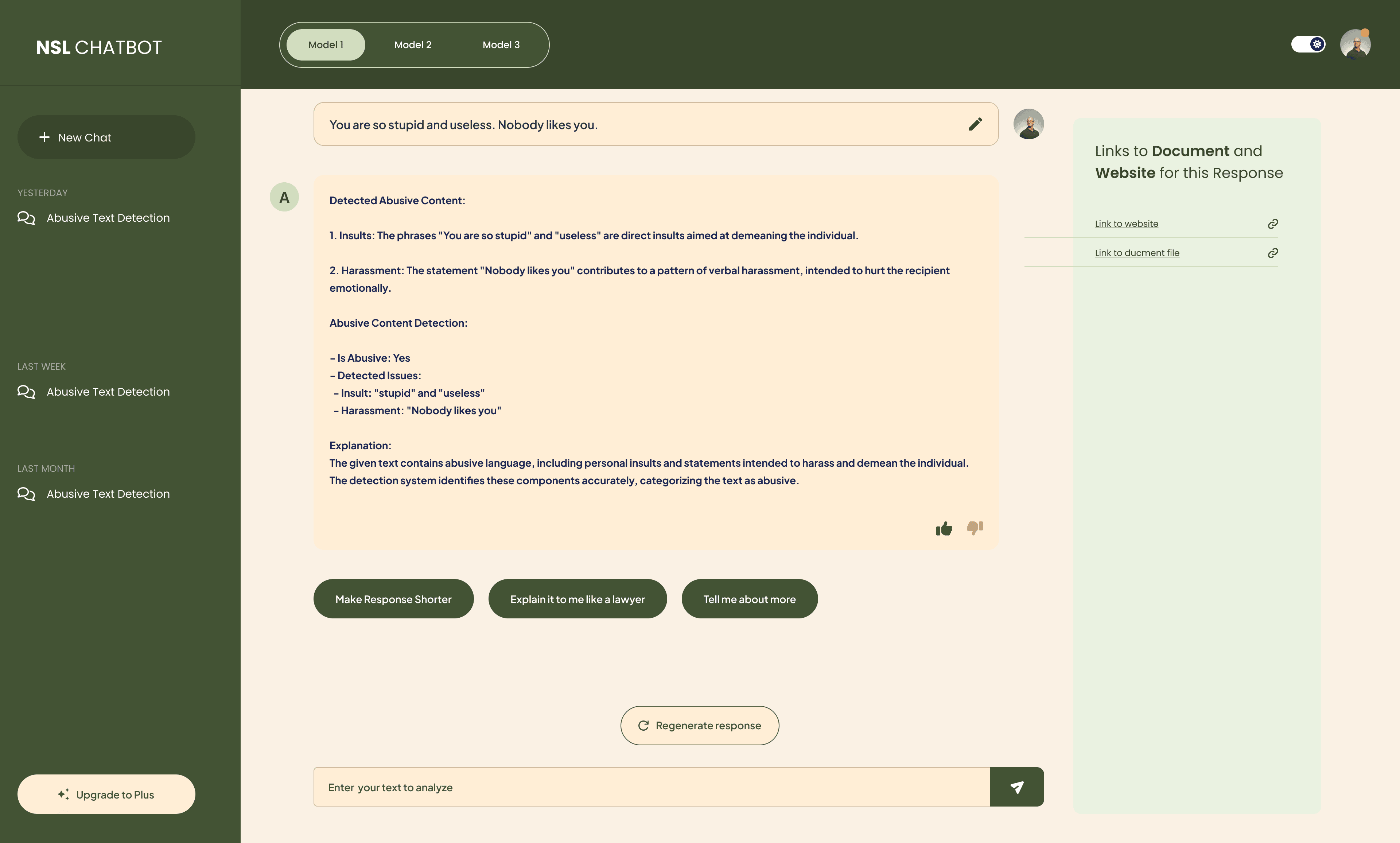

With the ChatGPT API and our advanced prompt engineering, our application detects abusive text more accurately, sensing nuance and subtly demeaning words or phrases.
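Prompt engineering also covers how the model's reply is consumed. A common pattern, sketched here under the assumption that the prompt asks the model to answer with a small JSON object, is to validate the reply and route anything unparseable to human review instead of guessing. The field names `abusive`, `category`, and `needs_review` are hypothetical.

```python
import json


def parse_verdict(raw_reply):
    """Parse a model reply expected to be JSON; fall back to human review."""
    fallback = {"abusive": None, "category": "unparsed", "needs_review": True}
    try:
        data = json.loads(raw_reply)
    except json.JSONDecodeError:
        return fallback
    # Require a JSON object with an explicit boolean decision.
    if not isinstance(data, dict) or not isinstance(data.get("abusive"), bool):
        return fallback
    return {
        "abusive": data["abusive"],
        "category": str(data.get("category", "unspecified")),
        "needs_review": False,
    }


ok = parse_verdict('{"abusive": true, "category": "harassment"}')
bad = parse_verdict("Sure! This message looks fine to me.")
```

Treating malformed replies as "needs review" keeps a formatting slip by the model from silently becoming a moderation decision.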

Benefits

- Enhanced Detection Accuracy: By leveraging advanced NLP techniques and continuous learning, our AI system provides highly accurate detection of abusive text, reducing false positives and false negatives.

- Bias Reduction: Through rigorous bias detection and mitigation, our system promotes fairness and equity and aims to provide unbiased results across different user groups.

- Customer Support Systems: Detect abusive language directed at customer service agents in real time, allowing for appropriate intervention while maintaining quality support.

- Content Creation and Publishing: Automatically moderate user comments on articles, blogs, and videos to filter out abusive language. This helps maintain constructive discussions.

- Educational Platforms: Monitor chat rooms, forums, and comment sections to prevent bullying and ensure a positive, supportive learning environment for students.

Result

- Accurate Detection: Improved accuracy in detecting abusive text, resulting in safer digital environments.

- Reduced Bias: Ensures fair and unbiased detection across different user groups.

- Scalable Solutions: Efficient real-time processing of large data volumes, suitable for various online platforms.

- Enhanced Privacy: Compliance with data protection regulations and safeguarding of user privacy.

- User Trust: Increased user trust through transparent and accountable systems.

Let us know your interest

At Next Solution Lab, we are dedicated to creating advanced AI systems for detecting abusive text and ensuring a safe and respectful digital environment. If you are interested in learning more about how our projects can benefit your organization, please contact us.
